The merging of neurofeedback technologies and Generative AI holds the potential to reshape education by turning attention into a measurable commodity. How does this affect what it means to learn and to teach?

GenAI can now observe pupils and analyse their discourse. It seems we are moving towards a future where it not only observes pupils but actively measures their attentiveness, quantifies their engagement, and seeks to shape the very nature of how they learn. Recent research is uncovering how neuroscience, neurofeedback technologies, and GenAI are transforming attention into a measurable, commodifiable asset. As educators, how should we navigate the ethical labyrinth that arises when attention—once a personal, cultivated skill—becomes something to be monitored, manipulated, and monetised? How does this relate to the rights of the child?

Attention and Its Implications in Teaching

The ‘new science of education’ made a significant impact on teacher education after Dylan Wiliam’s tweet in 2017. Since then, it has gained immense momentum, sparking debates on social media and within academia. Attention has become the golden currency of this new science, rapidly achieving the status of a gold standard. You may recall the viral video of Pritesh Raichura, which ignited widespread discussion in the sector and seemed to provoke a Marmite response—you either love it or hate it. So why has attention become such a hot topic and led to such extreme reactions? To answer this, we need to examine its concerning connection to neurofeedback training—also known as brain training—and explore how GenAI could play a pivotal role in the system.

Until the last ten years or so, cognitive science was largely kept outside of the educational domain. But in 2019, in response to growing interest, the Core Content Framework (CCF) was introduced for initial teacher education. The CCF, and the soon-to-be ITTECF, brought with them a requirement for all providers to educate trainees on the theory and application of cognitive science. The more I work with trainees and explore the fundamentals of cognitive load theory (Sweller, 1988), the more I recognise that attention is the key hinge point: the connection between what the teacher feels they ‘do’ and what the learner ‘does’.

Attention is a complex field of study, one that the CCF and ITTECF heavily simplify. However, a clear aim emerges: attention is treated as a commodity to be maximised in order to achieve long-term information retention. I propose that attention serves as the connecting mechanism for two otherwise predominantly closed systems: the external world (the classroom) and the internal world (the learner). In this light, attention could be likened to the role of gravity in brane theory: it permeates and connects otherwise distinct systems. Its effects are felt in and across each system, but the connection is not always tangible or observable.

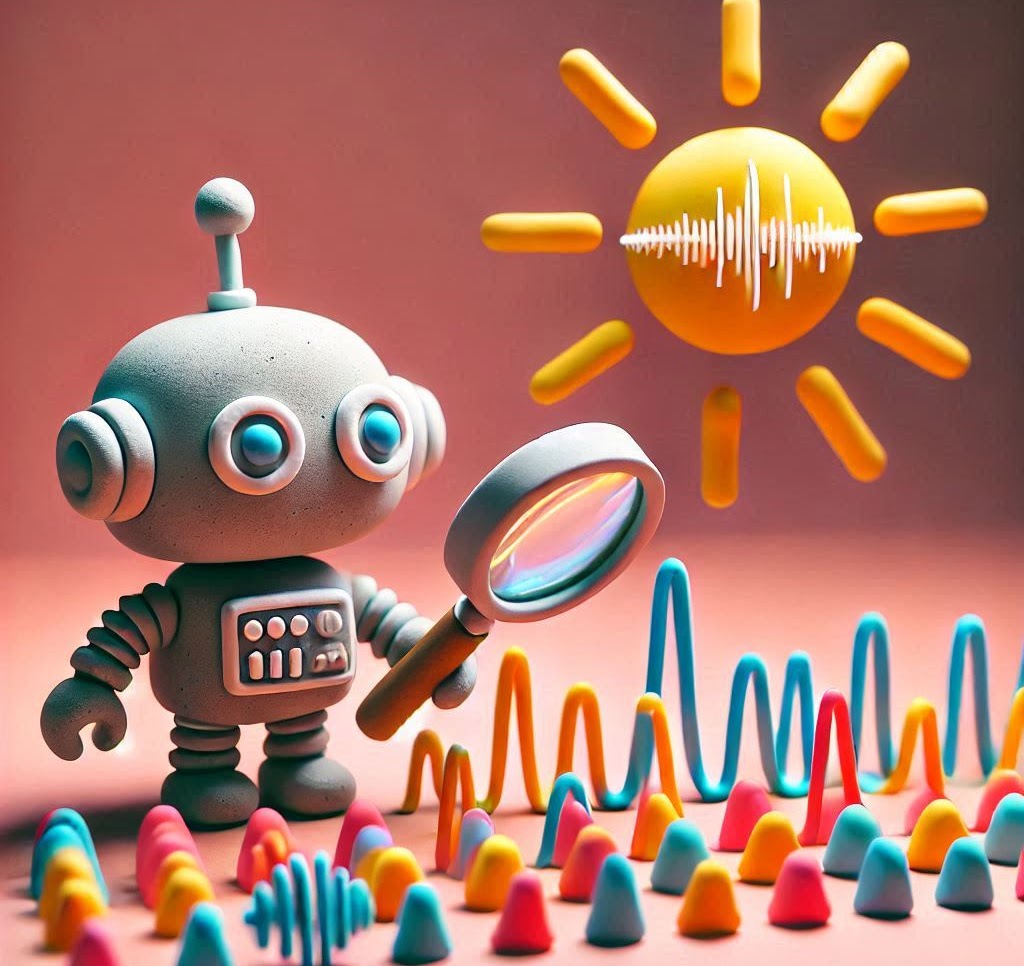

Generative AI offers new possibilities for supporting this dynamic. By gathering real-time data from classrooms and operationalising advancements in neurofeedback technology, GenAI could provide personalised insights into students’ attentional patterns. AI-driven adjustments could ensure that key moments of instruction align with students’ peak cognitive receptivity, thereby enhancing memory retention and engagement. But is this ethical? How does this relate to the free will of the pupil? Is this the responsibility of the teacher?

Navigating Challenges and Ethical Considerations

While the integration of GenAI and neurofeedback tools offers exciting possibilities, educators must address the accompanying ethical concerns. Monitoring and measuring attention could shift the focus from cultivating student autonomy to prioritising compliance and control. If attention becomes a commodity to be technologically quantified, are we at risk of reducing students to data points and Fourier transforms?

A major concern is the commercialisation of attention-focused devices. As highlighted in a recent paper by Kotouza et al. (2025), neurofeedback technologies and EEG devices have already been marketed for use in classrooms, with some targeting “students with high numbers of disciplinary ‘office referrals’” to improve their concentration. How should educators respond when faced with the growing pressure to adopt such tools? What safeguards are needed to prevent data exploitation, bias in AI algorithms, and breaches of privacy?

Attention should be cultivated as both a skill and a choice. The marriage of neurofeedback and GenAI systems has the potential to remove or heavily reduce this choice. The UN Convention on the Rights of the Child (UNCRC) states that every child has the right to:

- Relax and play

- Freedom of expression

- Be safe from violence

- An education

- Protection of identity

- Sufficient standard of living

- Know their rights

- Health and health services

Several of these rights are put at risk by systems that aim to maximise attention.

So, where is the line between control exercised by a teacher and control exercised by a machine? Raichura’s video maximises attention skilfully. How does this compare to a neurofeedback-GenAI system which effectively does the same?

We are currently in a pivotal period of change and ethical debate – what does it mean to be a teacher in this context of neurofeedback and GenAI and how can these tools enhance education without compromising the agency, privacy, and humanity of learners?

In conclusion, the convergence of neurofeedback, GenAI, and education offers both promise and peril. As educators, we must critically evaluate whether these technologies truly serve the learner or merely reduce them to data-driven outputs. The potential to enhance attention and engagement is undeniable, but so too is the risk of undermining student agency and the foundational principles of education.

The question remains: how can we harness these advancements responsibly, ensuring they enhance teaching rather than challenging humanity at its core? As we grapple with this pivotal moment in educational evolution, the choices we make today will shape not only the future of teaching but the very essence of what it means to learn.

References

- Department for Education (2019) Core Content Framework. Available at: https://www.gov.uk/government/publications/initial-teacher-training-itt-core-content-framework (Accessed: 22 March 2025).

- Department for Education (2024) Initial Teacher Training and Early Career Framework (ITTECF). Available at: https://assets.publishing.service.gov.uk/media/661d24ac08c3be25cfbd3e61/Initial_Teacher_Training_and_Early_Career_Framework.pdf (Accessed: 22 March 2025).

- Kotouza, D., Pickersgill, M., Pykett, J., & Williamson, B. (2025) ‘Attention as an object of knowledge, intervention and valorisation: exploring data-driven neurotechnologies and imaginaries of intensified learning’, Critical Studies in Education, 1–20. Available at: https://doi.org/10.1080/17508487.2025.2469673.

- Raichura, P. (2025) Posts on X. Available at: https://x.com/Mr_Raichura (Accessed: 22 March 2025).

- Sweller, J. (1988) ‘Cognitive load during problem solving: Effects on learning’, Cognitive Science, 12(2), pp. 257–285.

- UNICEF UK (2022) UN Convention on the Rights of the Child. Available at: https://www.unicef.org.uk/what-we-do/un-convention-child-rights/ (Accessed: 22 March 2025).

- Wiliam, D. (2017) Tweet on X. Available at: https://x.com/dylanwiliam/status/824682504602943489 (Accessed: 22 March 2025).